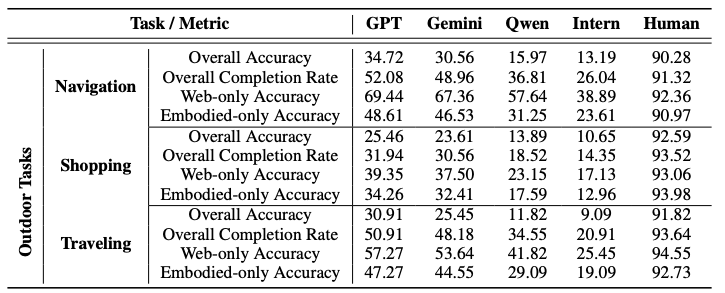

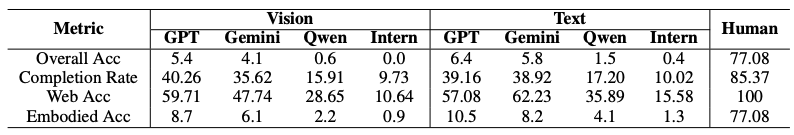

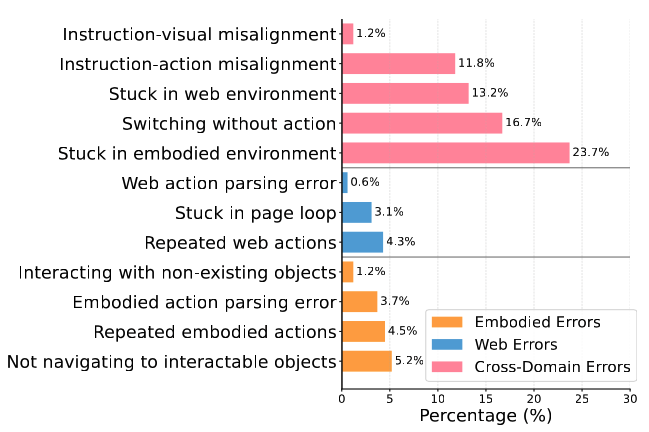

Our analysis reveals that the primary challenges for embodied web agents lie not in isolated capabilities but in integrating those capabilities across the physical and digital domains.

1

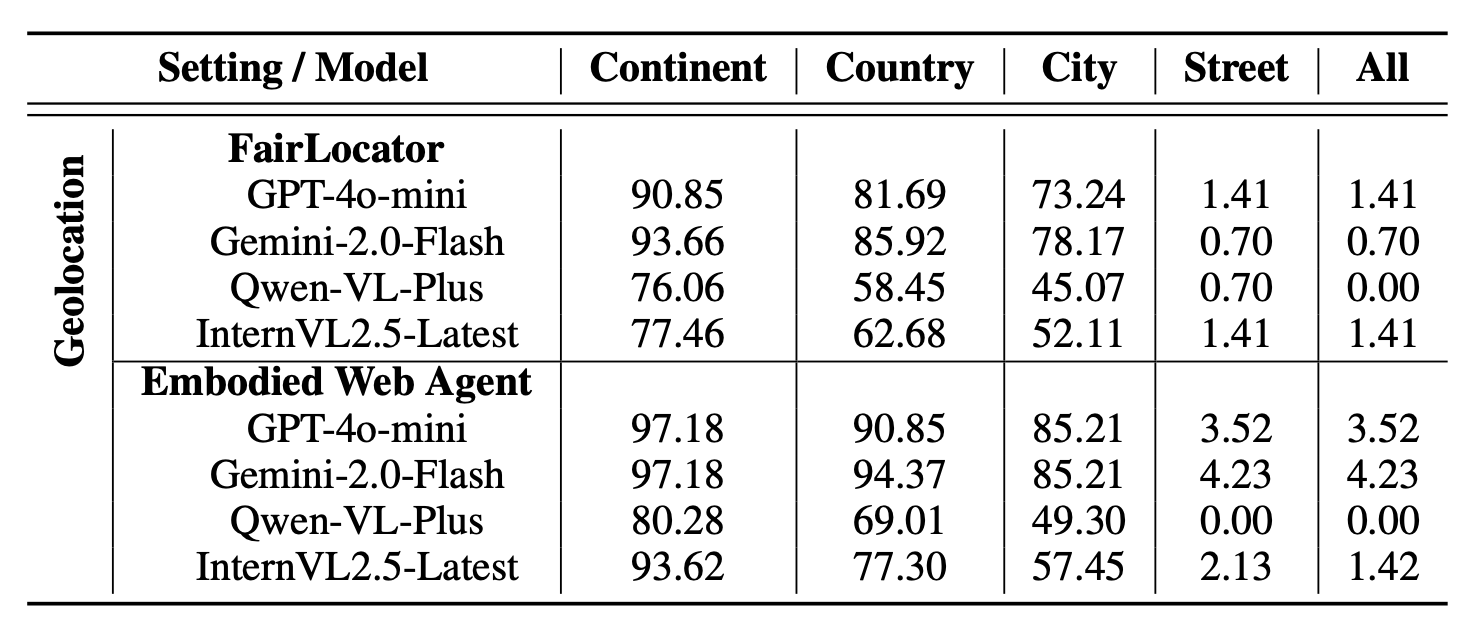

Domain Integration Challenge: Cross-domain errors (66.6%) far exceed individual domain errors, indicating that seamless integration between physical and digital realms remains the primary technical challenge.

2

Single-Domain Traps: Agents frequently become stuck in repetitive cycles within one domain, failing to effectively transition between embodied and web interactions when required.

3

Relative Domain Performance: While both embodied (14.6%) and web (8.0%) errors occur, their individual rates are significantly lower than cross-domain failures, suggesting competency in isolated tasks.
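The error shares and the trap behavior described above can be made concrete with a small analysis sketch. It assumes a hypothetical trace format in which every agent step is tagged with its domain (`"embodied"` or `"web"`) and an action string, plus hypothetical error labels such as `"cross_domain"`; it is an illustration, not the authors' annotation pipeline. `error_shares` turns per-episode error labels into percentage shares, and `find_single_domain_trap` flags a short action cycle that repeats back to back without ever leaving one domain, which is one simple way to operationalize a single-domain trap. The thresholds `min_repeats=3` and `max_period=4` are arbitrary illustration values.

```python
from __future__ import annotations

from collections import Counter
from dataclasses import dataclass


@dataclass(frozen=True)
class Step:
    # Hypothetical trace format: the domain the step acts in, plus the action text.
    domain: str  # "embodied" or "web"
    action: str  # e.g. "click(result_1)" or "move_to(kitchen)"


def error_shares(labels: list[str]) -> dict[str, float]:
    """Percentage of failed episodes in each (hypothetical) error category."""
    counts = Counter(labels)
    total = sum(counts.values())
    return {label: round(100 * n / total, 1) for label, n in counts.items()}


def find_single_domain_trap(
    trace: list[Step], min_repeats: int = 3, max_period: int = 4
) -> tuple[int, int] | None:
    """Flag an action cycle repeated back to back without leaving one domain.

    Periods are tried from shortest to longest; returns (start, period) for the
    first match, or None if the trace never loops this way.
    """
    for period in range(1, max_period + 1):
        span = period * min_repeats
        for start in range(len(trace) - span + 1):
            window = trace[start:start + span]
            if len({step.domain for step in window}) != 1:
                continue  # the agent switched domains, so this window is not a trap
            pattern = window[:period]
            if all(window[i] == pattern[i % period] for i in range(span)):
                return start, period
    return None


if __name__ == "__main__":
    labels = ["cross_domain", "cross_domain", "cross_domain", "embodied"]
    print(error_shares(labels))  # {'cross_domain': 75.0, 'embodied': 25.0}

    # A web agent bouncing between a result page and the result list.
    trace = [
        Step("web", "search(recipe)"),
        Step("web", "click(result_1)"),
        Step("web", "go_back()"),
        Step("web", "click(result_1)"),
        Step("web", "go_back()"),
        Step("web", "click(result_1)"),
        Step("web", "go_back()"),
        Step("embodied", "move_to(kitchen)"),
    ]
    print(find_single_domain_trap(trace))  # (1, 2)
```

Searching short periods first means a one-step repeat (the same action issued three times) is reported before longer loops. Raising `min_repeats` trades recall for fewer false alarms on legitimately repeated actions such as scrolling.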